The next Lex Fridman interview I watched is the one with Tomaso Poggio (video below).

Tomaso Poggio is a professor at MIT and is the director of the Center for Brains, Minds, and Machines. Cited over 100,000 times, his work has had a profound impact on our understanding of the nature of intelligence, in both biological neural networks and artificial ones. He has been an advisor to many highly-impactful researchers and entrepreneurs in AI, including Demis Hassabis of DeepMind, Amnon Shashua of MobileEye, and Christof Koch of the Allen Institute for Brain Science. ~ Description from Lex Fridman website

Thought Number 1: Study of Neuroscience to Build Better AI

The interview opened with a discussion of recent developments in Artificial Intelligence. The gist was that many of the breakthroughs in Artificial Intelligence resulted from the study of neuroscience, with the knowledge gained being transferred over to building better Artificial Intelligence. There is a very strong link between the two fields, and to keep building better AI we need to extend our study of the brain and understand it better.

There is a good example of how AI researchers are using ideas from the study of the mind to build better AI. If you followed Yoshua Bengio's keynote at NeurIPS 2019 last year, you will know that he suggested we need both a System 1 and a System 2 to improve on the current level of AI (video), referring to Daniel Kahneman's book, "Thinking, Fast and Slow" (my book review here).

If anyone can recommend good learning resources on neuroscience, please let me know. :)

Thought Number 2: Current Downside to AI (for now)

Currently, AI learns mostly from labelled data and requires tons of it. That is, to a large extent, very different from the human intelligence that AI researchers are building towards, because babies do not need that much data to know whether an animal is a cat. They look at a few pictures and are able to form a representation/cognitive model of a cat, and they further improve that model with feedback from teachers and parents. Million-dollar question: "How can we build an AGI that requires very few data points and is still accurate?"

Thought Number 3: Brain is Modular & Flexible

This point was very interesting to me. In the interview, one discussion point was "What is the challenge in transferring what we learnt from neuroscience to building artificial intelligence?"

Tomaso pointed out that the brain is actually messy and modular at the same time. Through MRI we can see certain parts of the brain "light up" when undertaking certain cognitive tasks, which suggests the brain is modular. But at the same time, if a part of the brain is damaged, other parts can step in and take over the cognitive task.

By comparison, when building an artificial intelligence, researchers can take apart and reconfigure both the hardware and the software as they see fit. The brain, however, cannot be taken apart so easily for study and reverse engineering. So even though building artificial intelligence offers a lot of flexibility in configuration, transferring what we learn from neuroscience to artificial intelligence may not be so straightforward.

Thought Number 4: Neuroscience of Ethics

Continuing on the theme of linking neuroscience to building better artificial intelligence, there was this discussion:

Can we build Ethics into Artificial Intelligence?

In the discussion, Tomaso mentioned that certain parts of the brain light up when a human faces a moral dilemma. By studying those parts of the brain, we might have a chance to build Ethical Machines, but this could take more time, for the reasons given in Thought Number 3.

Yes, given the above discovery there might be a chance to build Ethical Machines, but I am of the opinion that a quick win would be to focus on teaching ethics to AI professionals first: to make them aware that their work has an impact on society, and to keep that in mind whenever they decide how to use Artificial Intelligence. Blog post here for more details. :)

Side note: Check out this article on the Mercedes self-driving car.

Thought Number 5: Building AGI: What Is a Good Test?

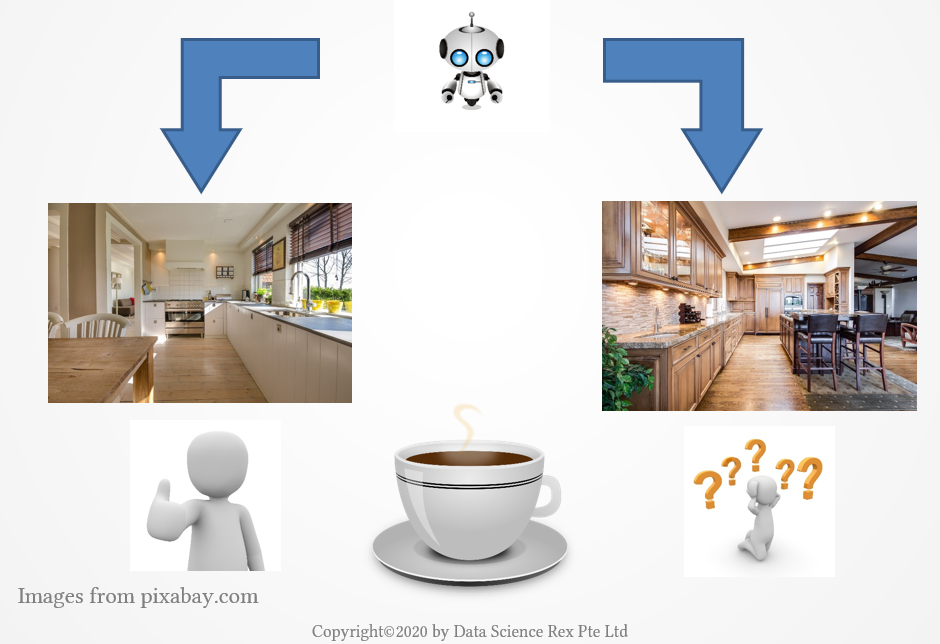

This is interesting because, as research progresses, many have started to ask, "Can the Turing Test really be used?" Others have proposed their own tests, for instance the "Coffee Test". In the "Coffee Test", an AI that can make coffee very well in Kitchen A is moved to Kitchen B, which has a very different layout, and it should still be able to make a decent coffee. (I cannot remember who proposed that test, but I read about it in "Architects of Intelligence" by Martin Ford.)

In their discussion, the best way to determine whether a Strong AI has been built is through scene interpretation, that is, how well the AI is able to interpret different scenes. This reminded me of an example from Andrej Karpathy. See below.

As humans, we laugh immediately when we see the above picture. This is because, in our minds, we are able to put together a lot of knowledge to form a representation/cognitive model of the picture. An Artificial Intelligence, however, has to understand a long list of facts and details before it can arrive at "funny". For the list, check out Andrej's website here.

Coming back, I recently read a paper by Gary Marcus, a proponent of working towards a hybrid model that combines connectionist AI with symbolic AI. I strongly recommend the paper if you are interested in building AGI (aka Strong AI). Here is the paper. In it, Gary points out how we should build the symbolic part and makes many interesting points about the current state of Artificial Intelligence research.

These are my learnings and thoughts on the interview so far. If you would like to discuss the content or have any AI-related questions, feel free to connect with me on LinkedIn or Twitter (@PSkoo). Do consider signing up for my newsletter too. :)