Most of you may be familiar with Kaggle or the likes, a place where machine learning enthusiasts are competing with one another to find a machine learning model that ultimately scores very well on a SINGLE metric. A metric that is usually decided after a discussion with the data sponsor and the competition platform owner or competition organizers. There was a time where anyone with the title of Kaggle Masters was revered.

Before I continue, personally I give credit to most of the Kaggle Masters who has spent a lot of effort to chase the metrics and its not (and NEVER) easy. But on the flipped side, I have heard of stories where people call themselves "Kaggle Masters" but have actually been riding on the coat-tails of their MORE proficient, and recently a Kaggle GrandMaster was found cheating.

Point: even if they have Kaggle Master as their title, please validate if they truly earn the title.

In this blog post, I will discuss the difference between a competition versus an actual data science project. I hope through this post, talents will understand where they are inadequate and for companies looking to hire, the "Kaggle Master" title just means the person is technically very good in Machine Learning but Machine Learning itself is just a small component of a data science project in business.

Project Scoping!

Biggest challenge freshly trained data scientists have is project scoping. What do I mean? Being able to move from a Business Question to a Data Science Question (i.e. designing the solution, how machine learning can help to answer the business question). For instance, let's say a supermarket chain will like to determine who to reach out to for their marketing campaign. This is the business question.

What is the data science question? Well, most of the fresh data scientists will answer this business question needs a campaign response model thus reduced to a Classification problem. Is that the direction you are taking? Well...let me put in another perspective and that is we also need to estimate how much are they willing to spend at the supermarket, translating it to a Customer Lifetime Value problem. Think about it, chances are you will be using past purchasing history to assess the probability of purchase/response but have you looked at how likely the customer is going to spend the dollars with you and not other supermarket?

And I have not even put down other factors that might affect the scope, for instance the availability and quality of data. Kaggle, hackathons and machine learning competitions have removed this crucial step allowing all enthusiasts to jump straight into the machine learning part.

Data Collection

Do you know in an actual Data Science project, you will need to fight (figuratively speaking) to get your data? Most organizations data are arranged in silos thus there are "fiefdom" you need to conquer to gain the data required? You have it lucky in the competition, the data is cleaned and handed over to you in a silver platter.

In the actual situation, data collection is challenging, not only the technical aspect (data management & data governance) but also the human aspect as well. For the technical aspect, there is a cost to collecting and storing data, thus data scientist need to justify the cost and it is not easy at all. For the human aspect, data science is very new and most layman do not know what to expect of it. They only see that ceding control of data over is an action of giving up power/influence. A lot of effort will be need to convince them its for the greater good and how they can benefit from it. In a competition? Nope you do not have to worry about that. So good... :)

Target Metric

This ties back to the project scoping as well. What is the performance metric that will allow us to select the most suitable machine learning model for the business question at hand. For more information, on selecting which metrics, you can check out my other blog post (more for Classification problems).

In reality, selecting the metric to get the best model is never straightforward and need more thoughts into it. Otherwise, any changes to an implemented model will incur a large cost because the business will need to do the testing all over again. It is very painful! This brings me down to the next point.

Implementation & Deployment

Most practitioners will have heard about the Netflix Prize. Did you know that the prize was won in 2009 but the winning team's solution was used partially? Why is that so? Because in the actual competition, there is no mention of the need to consider the engineering effort. The winning model takes up much engineering effort, so much so it does not justify the additional value provided, which is the additional increase accuracy.

Again this is the difference between competition and reality. In most competition, it is a relentless pursuit of the pre-determined performance metric. There is no consideration for the engineering effort. To be fair, it will not be easy to suggest that consideration be made available, because that might expose the technology stack the company is using, which can compromise security and privacy.

To be fair, from what I read so far, the winning team in Kaggle needs to assist with the implementation so, to a certain extent, engineering effort will be taken into account.

One more thing and that is the consideration for computation complexity. Business does not function without resource constraints. There are business limitations when it comes to selecting the best model. Thus besides selecting the performance metrics to measure the effectiveness of the machine learning model, we also have to take into account how long, in reality, does the machine learning process take to come up with a decision. For example, how many of us are willing to wait for more than 5 seconds to see your Netflix fill up with shows recommendation? In this day and age, 1 second itself is considered very long.

Metric is NOT the Only Consideration

Let you in on a secret. Metric is only one of the many factors in considering which model to implement during the training/validation phase. If you have worked in financial institutions or healthcare, you will realise how Compliance & Regulations can affect the selection of the final model. For instance, the need for transparency and explain-ability means you cannot use any ensemble models at all.

Conclusion

Companies should not give too much weightage people who has won Machine Learning competition. They are good but they are still lacking experience in important areas like project scoping and business considerations unless they are able to show actual work experience. A very experienced data scientist may not be able to win competition, become a Kaggle Master, but I am very sure they can help to ensure a smooth process in turning data to value.

Kudos to those who participate and won Machine Learning competition. Its not easy and never easy but the reality is much bigger. :)

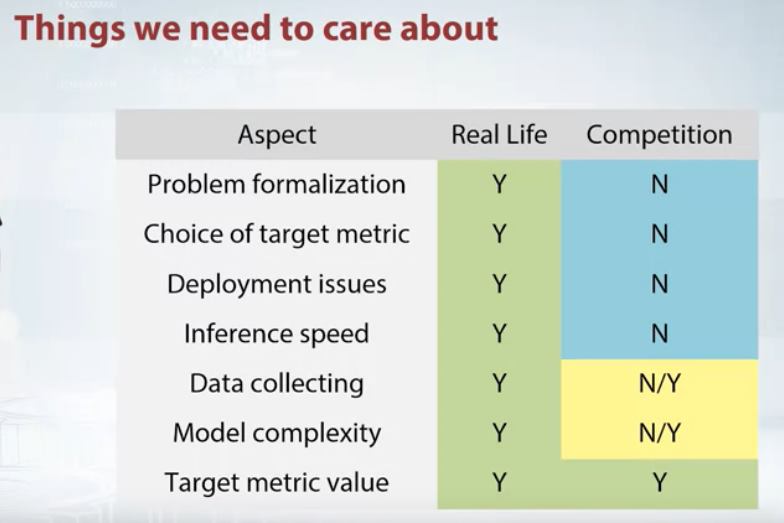

One last image to summarize what I have just discussed.

If you got any feedback, please feel free to reach out to me on LinkedIn or Twitter. And do check out my other posts. Consider subscribing to my newsletter to find out what I am thinking, doing or learning. :)