Timothy has just graduated from a Data Science degree program and started working at Lifestyle Coupons, a company that sells consumers discount coupons, similar to Groupon. Lifestyle Coupons has just embarked on the journey to gain value from its data through Data Science and Artificial Intelligence. To help with that journey, the company has hired Pasiko, an experienced data scientist who has been working with data for many years.

Pasiko gave Timothy, his new hire, a "warm-up" assignment: to train an email marketing campaign response model. Timothy was very excited about his first work project and dived into it quickly. He tried his best to come up with the "best" machine learning model, one that could accurately predict which customers would respond to the campaign. His goal was simply to build the most accurate model he could.

After a few weeks of working on the project, Timothy had come up with the most accurate model he could train given his education. He presented it to Pasiko.

Timothy: Pasiko, here is the model I have trained. It should help us determine which customers will respond to our marketing campaign, so that our Marketing team can reach out to the right people.

Pasiko: OK, I am looking forward to hearing about it. By the way, which model performance metrics did you use to determine your final model?

Timothy: Model performance metrics? Eh... I chose the most accurate model, the one with the highest accuracy rate. Is that the model performance metric you are referring to?

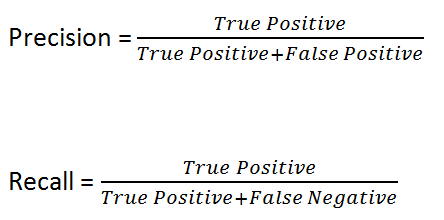

Pasiko: Well yes, Accuracy is one of them, but there are many others, for instance Precision and Recall. It looks like it's time for a lesson in business reality. Can you write the formula for Accuracy on the whiteboard?

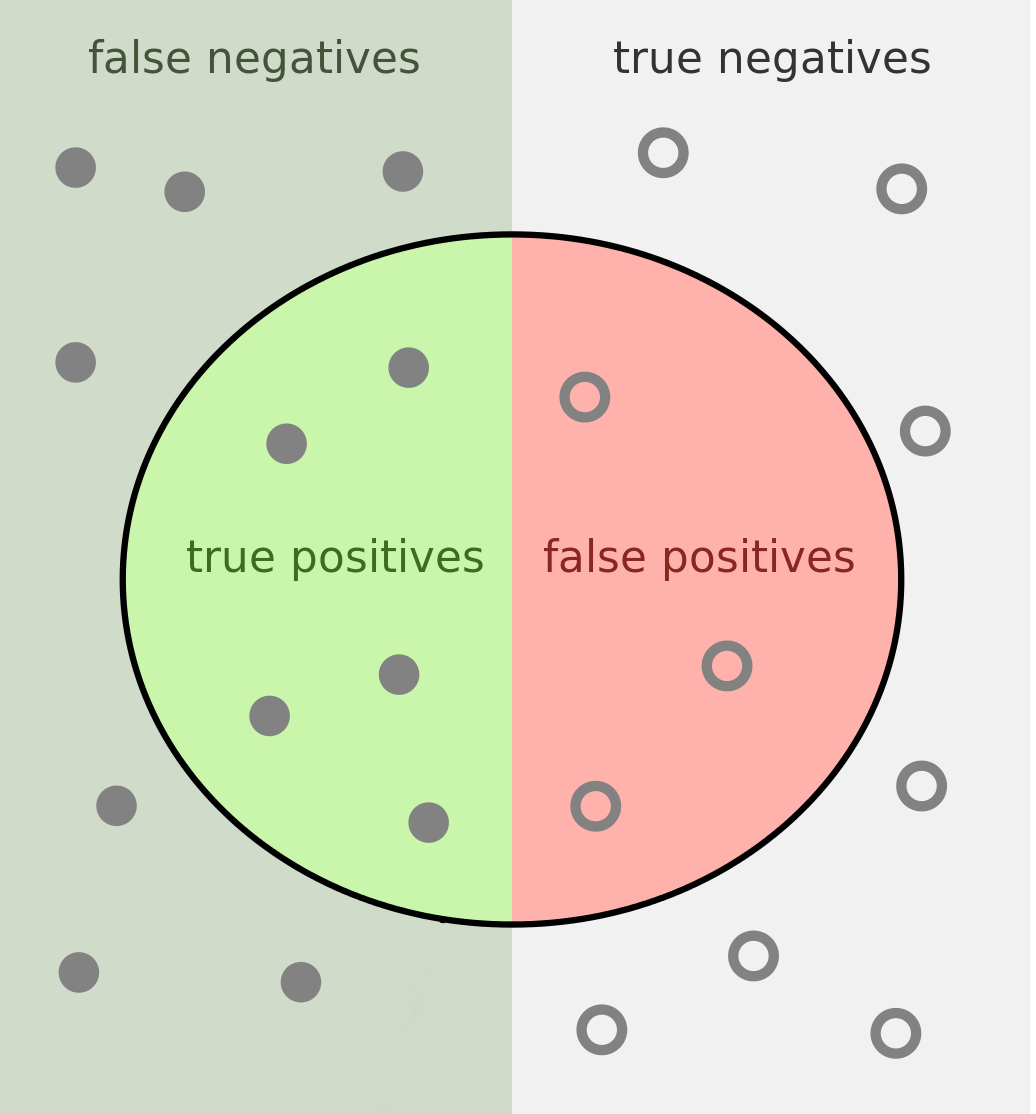

Timothy went over to the whiteboard and wrote down the formula for Accuracy.
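In standard notation, with TP, TN, FP and FN standing for the counts of True Positives, True Negatives, False Positives and False Negatives, Accuracy is usually written as:

$$
\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}
$$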

Pasiko: Tell me, Timothy, from the formula, if we are to increase Accuracy, we should be increasing...

Timothy: True Positive and True Negative!

Pasiko: Fantastic! So if you think about it, you can have a very accurate model simply because it has a high number of True Negatives, right?

In this particular case, does a very high True Negative count reduce the business's costs or lost revenue? Not really, right? True Positives, on the other hand, do have an impact on the business: the more True Positives we have, the more revenue the company makes. So, comparing True Positives and True Negatives, the company would like a trained model that captures as many True Positives as possible.
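To make the point concrete, here is a minimal sketch with made-up numbers. The 1,000 customers and the 5% response rate are assumptions for illustration, not figures from Lifestyle Coupons. A "model" that simply predicts that nobody will respond still scores high Accuracy, purely from True Negatives, while finding zero responders.

```python
# Hypothetical campaign data: 1 = responded, 0 = did not respond.
# Assumed for illustration: 1,000 customers, 50 of whom responded (5%).
actual = [1] * 50 + [0] * 950

# A trivial "model" that predicts nobody will respond.
predicted = [0] * len(actual)

# Count the four confusion-matrix cells.
tp = sum(1 for a, p in zip(actual, predicted) if a == 1 and p == 1)
tn = sum(1 for a, p in zip(actual, predicted) if a == 0 and p == 0)
fp = sum(1 for a, p in zip(actual, predicted) if a == 0 and p == 1)
fn = sum(1 for a, p in zip(actual, predicted) if a == 1 and p == 0)

accuracy = (tp + tn) / (tp + tn + fp + fn)
print(f"TP={tp} TN={tn} FP={fp} FN={fn}")
print(f"Accuracy = {accuracy:.2%}")  # high, yet not a single responder is found
```

Here the whole 95% Accuracy comes from the 950 True Negatives; the model contributes nothing to the campaign.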

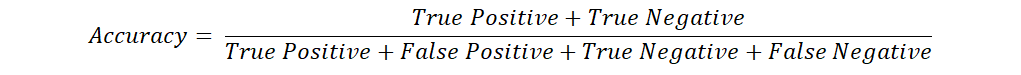

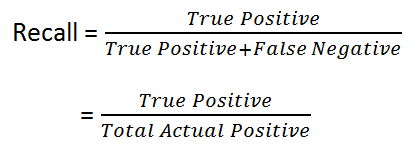

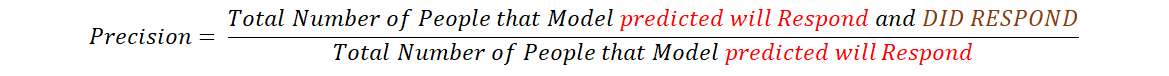

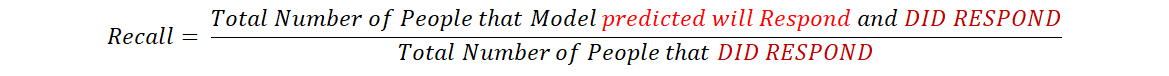

Let us now look at Precision and Recall, which focus on the True Positives and leave True Negatives out entirely. Can you write out the formulas for Precision and Recall?

Timothy walked over and wrote out the following:

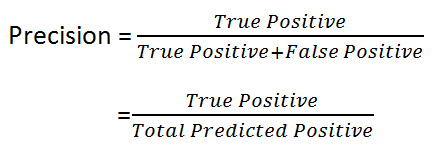
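In standard notation, using the same TP, FP and FN counts as the Accuracy formula, the two formulas are usually written as:

$$
\text{Precision} = \frac{TP}{TP + FP} \qquad \text{Recall} = \frac{TP}{TP + FN}
$$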

Pasiko: Timothy, if you have not done so, do check out the following blog post I came across. I've just sent you the URL on WhatsApp. Moving on, I can also write the formulas you have stated in this way.

Let us translate the above to our context.

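Precision will be the Total Number of People Predicted to Respond who did Respond, divided by the Total Number of People whom the model says will Respond. Recall will be that same number, divided by the Total Number of People who actually Responded.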
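Here is a minimal sketch of the same two ratios phrased in campaign terms. The customer IDs and outcomes are invented for illustration; the point is only which group each metric divides by: Precision divides by the people the model flagged, Recall by the people who actually responded.

```python
# Hypothetical customer IDs: who the model flagged, and who actually responded.
predicted_to_respond = {"c01", "c02", "c03", "c04", "c05"}
actually_responded = {"c02", "c04", "c06", "c07"}

# People the model flagged who really did respond (True Positives).
predicted_and_responded = predicted_to_respond & actually_responded

# Precision: of everyone the model says will respond, how many did?
precision = len(predicted_and_responded) / len(predicted_to_respond)

# Recall: of everyone who actually responded, how many did the model find?
recall = len(predicted_and_responded) / len(actually_responded)

print(f"Precision = {len(predicted_and_responded)}/{len(predicted_to_respond)} = {precision:.2f}")
print(f"Recall    = {len(predicted_and_responded)}/{len(actually_responded)} = {recall:.2f}")
```

The numerator is the same for both; only the denominator, and therefore the group of people being measured, changes.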

Think about it now that Precision and Recall are in our context. Look at the denominators. Which group is more important to our business? It is the group that DID RESPOND, right? Those are the customers we want to focus on.

So which model performance metric should we be using? Pretty straightforward here, no?

Timothy: Wait, let me summarise the discussion so far.

1) Both True Negatives and True Positives increase Accuracy, so True Negatives can 'distort' it: we can have a high Accuracy rate that comes largely from True Negatives. But in a business context, True Negatives usually have no impact on the business: no lost opportunity or revenue.

So we may need to consider Precision or Recall instead.

2) For Precision and Recall, we look at the denominator to determine which group we want to focus on, that is, which group is more important to our analysis, and from there decide whether to use Precision or Recall.

Am I right?

Pasiko: On your first point, yes. The second point can get pretty complicated, because deciding which group of people is more important can be challenging. Here is how I choose instead.

I ask myself: which costs the business more, a False Negative or a False Positive? Let us put that into our context.

False Negative is...

Timothy: Let me try, please.

A False Negative is when the model predicts the customer will "Not Respond" but the customer does "RESPOND". That is a lost revenue opportunity, since we decided not to reach out.

A False Positive is when the model predicts the customer will "Respond" but the customer does "NOT RESPOND". That only creates a very small disturbance when we reach out during our campaign, with no lost revenue opportunity.

So in our case, a False Negative has a higher cost to the business than a False Positive... right?
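Timothy's reasoning can be turned into a rough cost calculation. In the sketch below, the per-error costs are pure assumptions (a missed responder is assumed to forfeit $20 of revenue, an unnecessary email is assumed to cost $0.05), and so are the error counts of the two hypothetical models; with real figures the comparison works the same way.

```python
# Assumed, illustrative costs per error (not real Lifestyle Coupons figures).
COST_FALSE_NEGATIVE = 20.00  # lost revenue from a responder we never contacted
COST_FALSE_POSITIVE = 0.05   # cost of emailing someone who will not respond


def campaign_error_cost(false_negatives: int, false_positives: int) -> float:
    """Total cost of a model's mistakes under the assumed per-error costs."""
    return (false_negatives * COST_FALSE_NEGATIVE
            + false_positives * COST_FALSE_POSITIVE)


# Two hypothetical models: A misses more responders, B sends more wasted emails.
cost_a = campaign_error_cost(false_negatives=30, false_positives=10)
cost_b = campaign_error_cost(false_negatives=5, false_positives=200)

print(f"Model A error cost: ${cost_a:.2f}")
print(f"Model B error cost: ${cost_b:.2f}")
```

Model B makes far more mistakes in total (205 versus 40), yet its mistakes are the cheap kind, so under these assumptions it costs the business much less. That asymmetry is what makes False Negatives the error to minimise here.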

Pasiko: Exactly! So let us go back to the Precision and Recall formulas. Which formula has False Negatives in the denominator? Recall, right? So yes, we should select the "best" model based on Recall; in other words, Recall should be the model performance metric we use to select the best trained model.
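A minimal sketch of that selection step, using invented confusion-matrix counts for three candidate models evaluated on the same 1,000 customers. The model names are hypothetical labels, not Timothy's actual models. Ranking by Accuracy and ranking by Recall pick different winners.

```python
# Hypothetical confusion-matrix counts for three candidate models
# evaluated on the same 1,000 customers (50 responders, 950 non-responders).
candidates = {
    "logistic_regression": {"tp": 10, "fp": 5,   "fn": 40, "tn": 945},
    "random_forest":       {"tp": 25, "fp": 40,  "fn": 25, "tn": 910},
    "gradient_boosting":   {"tp": 42, "fp": 150, "fn": 8,  "tn": 800},
}


def accuracy(c):
    """(TP + TN) / all predictions."""
    return (c["tp"] + c["tn"]) / (c["tp"] + c["tn"] + c["fp"] + c["fn"])


def recall(c):
    """TP / (TP + FN): share of actual responders the model found."""
    return c["tp"] / (c["tp"] + c["fn"])


best_by_accuracy = max(candidates, key=lambda name: accuracy(candidates[name]))
best_by_recall = max(candidates, key=lambda name: recall(candidates[name]))

for name, counts in candidates.items():
    print(f"{name:20s} accuracy={accuracy(counts):.3f} recall={recall(counts):.3f}")
print("Best by accuracy:", best_by_accuracy)
print("Best by recall:  ", best_by_recall)
```

With these numbers the most accurate model finds only 10 of the 50 responders, while the highest-Recall model finds 42 of them despite its lower Accuracy.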

Timothy: I see! Wow! I learned a lot today!

1) True Negatives can distort Accuracy, so the most accurate model may not be the most suitable one for the business purpose, since for business purposes we care more about getting more True Positives.

2) We then assess which has a higher cost to the business, False Positive or False Negative. If it is False Positive, we go for the highest Precision. If it is False Negative, we go for the highest Recall instead.

Pasiko: Great! You have captured the essence of it. Do you know what to do next?

Timothy: YUP! I should have the other metrics' values stored somewhere. Let me touch things up a bit and I will come back with the new model. Thanks for the guidance!

With that, Timothy went back to his code, selected the model with the highest Recall, and continued his analysis with it.

Author's Note

I have heard a lot of horror stories where consultants (or should I say "consultants") propose going for the most accurate model, which is the most intuitive choice but not necessarily the best one for the circumstances. It is a very common mistake among those who are just starting to gain data science work experience. I have previously written a blog post that explains the theory, including the confusion matrix. If you are interested, here is the post for more details.

I hope that, by providing a learning example, this post helps more people understand that when it comes to training machine learning models, especially for classification problems, we have to evaluate which model performance metric to use in order to choose the model that suits the circumstances.

Thanks for making it this far. I appreciate the time and attention you have spent on this post and the active step you have taken in your learning.

If you found this post to be useful, please share it and do visit my LinkedIn profile to stay connected. For any feedback, you can go to my Twitter. Do consider signing up for my newsletter too. All the best in your learning journey! :)