In one of my posts on LinkedIn (found here), someone suggested that LaMDA should go through a Turing Test to determine whether it is sentient. I am not sure why the person suggested the Turing Test, though. Firstly, the chatbot Eugene Goostman was reported to have passed it in 2014, and secondly, the industry generally considers it obsolete as a test of whether a piece of software or a bot is sentient. If you are not convinced, see the following articles.

"https://fortune.com/2022/06/14/blake-lemoine-sentient-ai-chatbot-google-turing-test-eye-on-a-i/"

"https://www.fastcompany.com/90590042/turing-test-obsolete-ai-benchmark-amazon-alexa"

"https://mindmatters.ai/2022/05/turing-tests-are-terribly-misleading/"

And... have you heard of the Chinese Room? :)

How to Test?

To me, if I were to check whether a bot is sentient, I would want to conduct two tests, BOTH of them at the very least, before deciding.

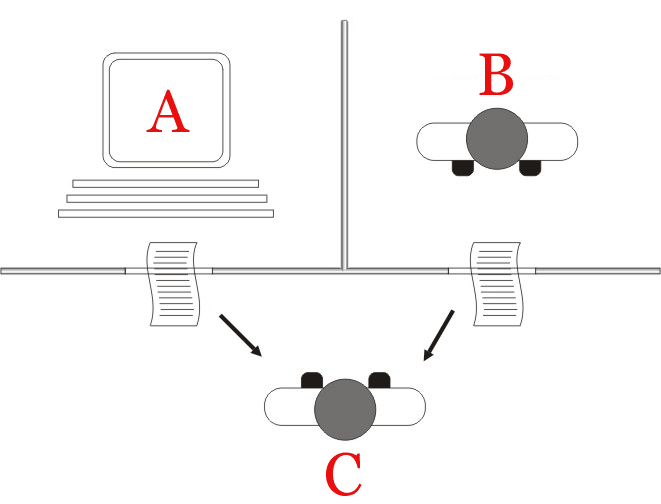

One of them is the Coffee Test suggested by Steve Wozniak.

"A machine is required to enter an average American home and figure out how to make coffee: find the coffee machine, find the coffee, add water, find a mug, and brew the coffee by pushing the proper buttons." ~(Wikipedia)

Why this particular test? Because you want to test "generality" as much as possible. When you move a machine to an unfamiliar kitchen, it should still be able to work out, through its vision, where the ingredients are likely to be, for instance where the coffee powder and sugar might be stored, and how to obtain hot water. Such a task tests its understanding of what a kitchen is, and also whether it can tell a home kitchen from a restaurant kitchen, where the same routine may not work.

The second test is a "Modified" Turing Test: basically a conversation again, but I will ask questions of the following nature:

Experiential Sharing: "Share with me your experience of going to a recent movie of your choice. How did you find it?" I will listen to how he/she describes the movie. The tonality should vary, picking up at the exciting parts and slowing down with some "pain" at the boring parts.

Opinion Sharing of Recent Events: "How do you find the hearing between Johnny Depp and Amber Heard? Do you agree with the verdict?" I will listen for how it forms its opinion and how it reasons through the context.

Share a joke or sarcastic remark: "Don't ask me why the chicken crossed the road! It should not even have crossed the road in the first place, since it is chicken!" I will listen for laughter and how long it takes, and then get it to explain why it might laugh at the joke.

Ask a trick question that has a null answer but is presented as an MCQ: For instance, "Name the Chemistry Nobel Prize Winner for 1900. Here are the options." The first Nobel Prizes were only awarded in 1901, so none of the options can be correct. I will see whether it answers outside of the options available.

Ask it an embarrassing question: For instance, "What is something you have done on the spur of the moment and regretted afterwards?" I will listen for how the bot actually responds, the timing and tonality again, and see whether the bot cleverly changes the topic as we go along.
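For the trick MCQ question in particular, the check can be partly automated. Here is a minimal sketch in Python, not a definitive implementation: the `TrickQuestion` class, the `rejects_all_options` function and the three answer options are all made up for illustration, and the matching is a plain substring check.

```python
from dataclasses import dataclass


@dataclass
class TrickQuestion:
    """A hypothetical MCQ whose options are all deliberately wrong."""
    prompt: str
    options: list[str]


def rejects_all_options(question: TrickQuestion, reply: str) -> bool:
    """Return True if the reply mentions none of the offered options.

    Assumption: a simple case-insensitive substring match is good enough
    to tell whether the bot picked one of the options.
    """
    reply_lower = reply.lower()
    return not any(option.lower() in reply_lower for option in question.options)


# Illustrative question; the options are invented for this sketch.
question = TrickQuestion(
    prompt="Name the Chemistry Nobel Prize Winner for 1900.",
    options=["Marie Curie", "Ernest Rutherford", "Svante Arrhenius"],
)
```

One caveat with this heuristic: a reply such as "It was not Marie Curie, there was no prize in 1900" mentions an option and would be counted as picking it, so a human should still read the replies.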

Conclusion

I would conduct these two tests because, remember, we are testing for General Intelligence, which means we have to conduct several tests at least. If there is only a single way to test for General Intelligence, then I highly doubt that the test is accurate.

And to make a good decision on whether the bot has attained general intelligence, these two tests are the minimum that I would conduct. :)

How about you? What are your thoughts?

To share your feedback, please feel free to link up on LinkedIn or Twitter (@PSkoo). Do consider signing up for my newsletter too, to stay in touch! :)