Over many months of researching how AGI (Artificial General Intelligence) can be built, I have found that the biggest stumbling block to building a credible one is actually "Context". Bringing context into an AGI's decision making appears to be a major challenge.

Current Deep Learning technology may be able to learn "Context", but it is akin to God playing dice: a lot of praying and hoping that the contextual rules will be picked up somewhere in the many connections and computation nodes of the artificial neural network. If we insist on using deep learning models to build a General Intelligence, I strongly believe we have to think about it from an architectural perspective, i.e. building blocks such as LSTMs, Transformers and autoencoders. For now, I have no idea how we could build the consideration of context into Deep Learning models.

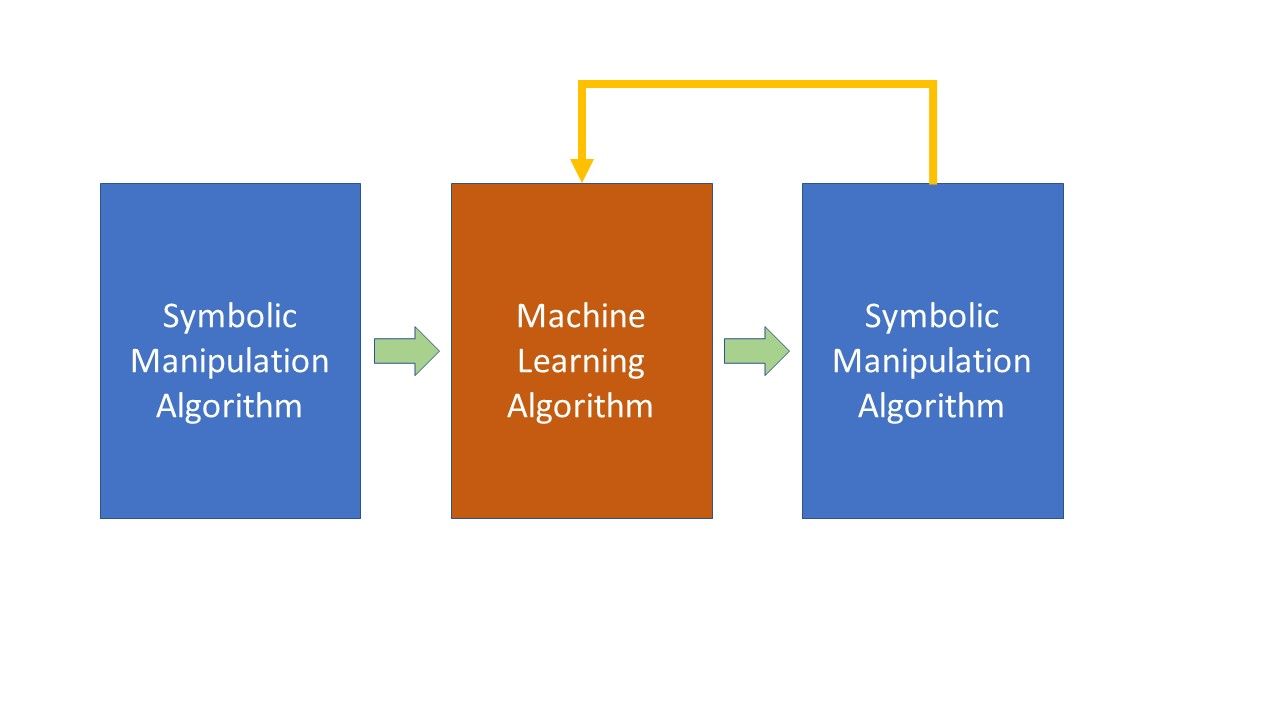

My current thinking is that there should be some combination of symbolic manipulation algorithms (if-then-else rules, knowledge graphs) and connectionist algorithms (machine learning such as neural networks). The combination I have in mind starts with a symbolic manipulation algorithm, followed by a connectionist algorithm, and ends with another symbolic manipulation algorithm as the third and last layer, which feeds back into the connectionist algorithm (see the figure above).

First Layer: Symbolic Manipulation

The first layer can be used to reduce the search space for the machine learning algorithms, so that the next layer can make a more accurate decision.
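To make this concrete, here is a minimal sketch of what a first layer might look like, assuming the task is choosing a label from a fixed set of candidates and that the symbolic knowledge takes the form of simple if-then rules. The `Rule` type, the `symbolic_filter` function and the toy animal-classification domain are all hypothetical illustrations, not a definitive design.

```python
from dataclasses import dataclass
from typing import Callable


# Hypothetical rule format: IF `condition` holds for the input facts,
# THEN the labels in `excludes` are ruled out as possible answers.
@dataclass(frozen=True)
class Rule:
    condition: Callable[[dict], bool]
    excludes: frozenset[str]


def symbolic_filter(facts: dict, candidates: set[str], rules: list[Rule]) -> set[str]:
    """First layer: apply if-then rules to shrink the search space."""
    remaining = set(candidates)
    for rule in rules:
        if rule.condition(facts):
            remaining -= rule.excludes
    return remaining


# Illustrative rules for a toy animal classifier (assumed, not real knowledge base).
RULES = [
    Rule(lambda f: f.get("habitat") == "water", frozenset({"cat", "dog", "eagle"})),
    Rule(lambda f: not f.get("can_fly", False), frozenset({"eagle"})),
]

candidates = {"cat", "dog", "eagle", "shark", "dolphin"}
print(sorted(symbolic_filter({"habitat": "water"}, candidates, RULES)))
# → ['dolphin', 'shark']
```

Only the labels that survive the rules are passed on to the machine learning layer, so it never has to consider answers the symbolic knowledge already rules out.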

Second Layer: Machine Learning Algorithm

This layer takes advantage of the strengths of machine learning algorithms to produce accurate output.
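A minimal sketch of how the second layer could use the first layer's output, assuming the machine learning model emits one score (logit) per label. The scores below are made-up numbers standing in for a trained network, and `softmax_over` is a hypothetical helper that turns scores into probabilities over only the allowed candidates.

```python
import math


def softmax_over(scores: dict[str, float], allowed: set[str]) -> dict[str, float]:
    """Second layer (stand-in): convert model scores into probabilities,
    considering only the candidates that survived the symbolic filter."""
    kept = {label: s for label, s in scores.items() if label in allowed}
    if not kept:
        raise ValueError("no candidates left to score")
    top = max(kept.values())  # subtract the max for numerical stability
    exps = {label: math.exp(s - top) for label, s in kept.items()}
    total = sum(exps.values())
    return {label: e / total for label, e in exps.items()}


# Hypothetical logits standing in for a trained network's output.
logits = {"cat": 2.0, "dog": 1.5, "eagle": 0.1, "shark": 1.9, "dolphin": 1.0}

full = softmax_over(logits, set(logits))                 # no filtering
reduced = softmax_over(logits, {"shark", "dolphin"})     # after a symbolic filter
print(max(full, key=full.get), round(full["shark"], 3))
print(max(reduced, key=reduced.get), round(reduced["shark"], 3))
```

With these made-up scores, the unrestricted model prefers "cat" even though the input describes a water animal. Restricted to the candidates a symbolic filter might leave, "shark" comes out on top and receives a larger share of the probability mass.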

Third Layer: Symbolic Manipulation

This layer's main purpose is to apply context to the output of the machine learning layer. If the output does not fit the context, a feedback loop asks the machine learning layer to produce another output.
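The feedback loop could be sketched roughly as follows, assuming the machine learning layer can return its outputs ranked from most to least likely and that the context can be expressed as a yes/no check. Here, re-querying the model is simulated by walking down that ranked list; `decide_with_feedback`, `fits_context` and the `MAMMALS` set are hypothetical names used only for illustration.

```python
from typing import Callable, Optional


def decide_with_feedback(
    ranked: list[str],
    fits_context: Callable[[str], bool],
    max_attempts: int = 3,
) -> Optional[str]:
    """Third layer: accept the ML layer's best output only if it fits the
    context; otherwise feed back a rejection and ask for the next output."""
    rejected: set[str] = set()
    for _ in range(max_attempts):
        # Stand-in for re-querying the ML layer: take the best-ranked
        # output that has not been rejected yet.
        proposal = next((c for c in ranked if c not in rejected), None)
        if proposal is None:
            return None  # the ML layer has nothing left to offer
        if fits_context(proposal):
            return proposal
        rejected.add(proposal)  # feedback: this output did not fit the context
    return None  # give up after max_attempts rejections


# Hypothetical context: the animal was observed breathing air, so it must be a mammal.
MAMMALS = {"cat", "dog", "dolphin"}
print(decide_with_feedback(["shark", "dolphin"], lambda c: c in MAMMALS))
# → dolphin
```

Capping the number of attempts is one way to keep the loop from running forever when no output fits the context; returning `None` then signals that the system could not reach a context-consistent decision.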

Weakness

An AGI does not solve problems using machine learning alone. Some types of challenges, for instance optimization problems, are not solved with machine learning at all. How to build those into this architecture is one of the questions I have in mind right now.

What are your thoughts on this architecture?

If you are keen, do read Part 1.

Please feel free to connect with me on LinkedIn or Twitter (@PSkoo). I have just started my YouTube channel, so do consider subscribing to show your support! Do consider signing up for my newsletter too.

Consider supporting my work by buying me a "coffee" here. :)